The big list of capabilities in making music

Breaking down all the actions involved in the music making process (and the power of ranking them by authenticity and creativity).

Today’s paid version of the AI Artistry newsletter is being given to you free.

This post is music-centric and all about the different capabilities that go into making music. It’s part of something bigger I’m working on called my “AI Map for Music Makers”. I’m pulling from ten thousand hours and hundreds of songs to think deeply about where AI lives in music, and mapping it all out for the good of our processes and our sanity.

Sign up to join the waitlist for the AI Map for Music Makers

As a musician, you can live under a rock, hiding from ChatGPT and all its sharp-toothed little brothers and sisters. You might know absolutely NOTHING about AI, maybe because you don’t want to know or don’t care. But even then, you still know one thing:

The line you’ll never let technology (and by extension, AI) cross.

It’s different for everyone and open to debate. If you’ve drawn yours, I’d love to hear about it.

But what does that actually mean in practice? Well, it depends on a few things, including:

The parts of the music making process

How we view the creativity of any given capability

How we value the authenticity of any given capability

That’s a lot, but in this post, we’re just aiming to lay the foundation. Let’s start with how we think about all of the capabilities involved in creating music.

At their core, these are the capabilities of making music (within which we can find little spots for using AI). Whether we’re comfortable using AI in those spots is a personal decision.

These fall into seven categories.

The 7 categories for AI use

We’re looking at all aspects of making music. Everything from:

Idea capture and transformation: How musicians record, transcribe, or convert raw musical ideas into structured, usable digital formats (quickly or otherwise). Anything from voice memos to transcribing a hum.

Lyric and concept development: Crafting, refining, and enhancing lyrics, everything from poetic devices to song concepts, tone, and wordplay.

Melody and musical idea generation: Developing or suggesting melodies, chord progressions, motifs, and musical structures. Giving sketches a more complete musical identity.

Song development and structure: Arranging and even critiquing songs, optimizing structure and flow.

Instrumentation and rhythm support: Creating, humanizing, or enhancing instrumental parts, rhythms, and layers—laying the musical foundation.

Vocal shaping and performance: Modifying or enhancing vocal performances, supporting both technical correction and creative vocal exploration.

Audio engineering and finishing: Ensuring professional quality and polish, through work like mixing, mastering, cleaning, restoring, and spatializing audio.

You’ll notice this categorization comes in a loosely chronological order. Put ‘em together and it looks like:

Idea capture -> Lyric and concept development -> Melody and musical idea generation -> Song development and structure -> Instrumentation and rhythm support -> Vocal shaping and performance -> Audio engineering and finishing

Sure, if you put five musicians in a room, four of them would argue with me about that order, but isn’t that what makes music great?

More importantly, we can break these categories down further into a core set of capabilities. In other words, the concrete actions we take as part of the music-making process.

The big list of capabilities (by category)

First, here’s an exhaustive list of all the capabilities, from capturing early ideas to final audio polish, each with a short definition.

🪶 Idea Capture and Transformation

Voice memo transcription and tagging: Turns voice recordings into text and labels them by mood, tempo, or key for easier organization.

Humming to MIDI conversion: Converts a hummed melody into MIDI notes that can be used for arranging or producing.

Idea to instrument matching: Suggests instruments or sounds that complement a rough sketch or initial idea.

Tempo mapping for live recordings: Creates a tempo grid based on a freeform or live performance to make it easier to build around.

Genre and style classification: Identifies the genre or musical style of a track to guide creative direction.

✍️ Lyric and Concept Development

Lyric suggestion and rhyme assistance: Provides line ideas, rhymes, and phrasing that fit your song’s tone and message.

Lyric sentiment alignment: Checks whether lyrics match the emotional intent of the song and suggests refinements.

Metaphor and imagery generation: Offers poetic language, visual metaphors, and evocative phrases to enhance lyrical depth.

Title and hook generation: Creates catchy song titles or chorus lines to make a track more memorable.

🎼 Melody and Musical Idea Generation

Melody generation from scratch: Creates original melodic phrases based on mood, genre, or thematic cues.

Chord progression suggestions: Recommends chord patterns that support the melody and emotional feel of the song.

Style or genre mimicry composition: Writes music in the style of a specific genre, artist, or time period.

Countermelody and motif generation: Adds secondary melodic lines or motifs to support and enrich the main theme.

Key or tempo experimentation: Suggests alternate keys, time signatures, or tempos to explore different emotional effects.

🧠 Song Development and Structure

Song structure optimization: Refines the arrangement of sections (verse, chorus, bridge) to improve flow and engagement.

Arrangement suggestions: Advises on which instruments and layers to introduce or remove at different points in the track.

Arrangement simplification for performance: Reduces complex arrangements into playable versions suited for smaller setups or live use.

Complete song generation: Creates full-length songs including lyrics, melody, chords, and arrangement.

Automated song critique or feedback: Evaluates a song’s structure, balance, or emotional impact and provides improvement ideas.

🥁 Instrumentation and Rhythm Support

Drummer or backing tracks: Generates drum grooves or complete rhythm sections that match the mood and tempo of a song.

MIDI humanization: Adds natural timing and velocity variations to MIDI sequences for a more human feel.

Loop or sample generation: Produces original musical loops or one-shots for use in beatmaking or production.

Instrument synthesis and design: Builds new instrument sounds or tweaks existing ones for use in recordings or live sets.

Stem separation or isolation: Splits finished songs into individual parts like vocals, drums, or bass for remixing or analysis.

🎤 Vocal Shaping and Performance

Pitch correction or Auto-Tune: Fixes off-pitch notes in a vocal track while retaining style and character.

Vocal harmony generation: Creates harmonies that follow a lead vocal’s melody and key.

Voice synthesis or cloning: Generates vocal performances that replicate or reinterpret an existing voice.

Language translation singing: Renders a sung vocal in another language while keeping melody and phrasing intact.

Real-time performance effects: Applies effects like reverb, delay, or filtering during recording or performance.

Emotional expression modeling: Shapes the tone or delivery of vocals or instruments to convey specific emotions.

🎚️ Audio Engineering and Finishing

Automatic mixing or EQ: Balances levels, stereo placement, and tone across multiple tracks for a clear and cohesive mix.

Mastering assistance: Applies finishing touches to make a song loud, polished, and ready for release.

Noise reduction or cleanup: Removes background noise, hums, or clicks from recorded audio.

Audio restoration: Improves clarity and fixes problems in degraded or poor-quality audio files.

Dynamic range optimization: Smooths volume levels across a track to maintain clarity and emotional impact.

Spatial audio processing: Places sounds in a stereo or 3D environment to enhance depth and immersion.

How does this help us?

When we know everything that goes into the process, it helps us identify where AI might work for us. But foundationally, it lets us do a hell of a lot more than that, from self-evaluating the creativity and authenticity of a specific part of the process to identifying how to use AI when it’s appropriate.

Looking at everything through two lenses

Two key emotional factors really drive our creative engines. They evolve over time, but they always shape which creative choices we make and which ones we skim past. They are creativity (“Is this about the big blue?”) and authenticity (“How much does this feel?”).

But when it comes to the honest process of music-making (i.e., the capabilities), it’s more like:

Creativity: How much room do I have to get wild?

Authenticity: How deeply does it affect the emotional realness of the result?

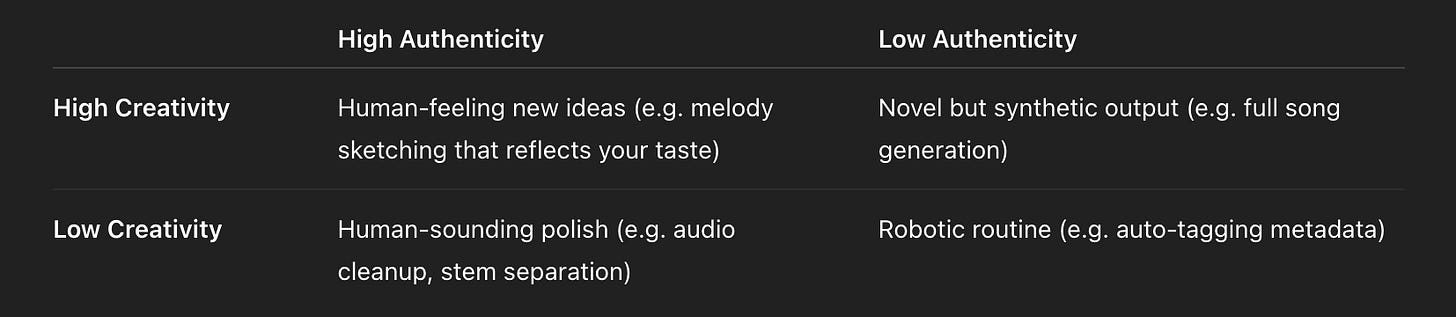

High creativity tasks generate new material: writing melodies, exploring harmonies, or experimenting with genre blends. Low creativity tasks are supportive, like reducing noise or cleaning audio.

Meanwhile, high authenticity refers to the emotional realness. Almost every capability opens the door for us to be authentic. Some, like the nuance of a vocal, just open the door much wider.

How can we think about authenticity and creativity within the realm of making music? Use the matrix above as your mental model.

But beyond understanding creativity and authenticity, this lens empowers us to make our process better. That’s because…

For any single capability, we can ask ourselves three questions

Like a flashlight pointed at your own creative process, these three questions help you make smart choices about how you work, why you work, and where technology has a seat. You know, all of the things that you want to live and breathe within your music.

Question 1: How am I doing this capability right now?

This question grounds you in your current habits. You can’t optimize or change what you haven’t noticed, and I’m guessing a lot of musicians don’t think about their process at this level of detail. But why not start, right?

Take vocal harmony generation as an example. Maybe you’re fumbling through multiple takes to stack harmonies by ear. Or maybe you’re not doing them at all because you don’t know where to start. By asking the question, you recognize that knowledge (or lack thereof) and set a direction.

Question 2: Would I use AI for this part of the process?

This is your filter for identifying your own personal creative boundaries. AI might be available, but does that mean you want to use it? Your answer here helps preserve your voice and intention.

You might say no to melody generation because that’s how you express emotion, and you want it to come from you. But noise reduction might just be a time-eating part of the process for you. You’re defining where AI or automation actively serves your process and where it gets in the way.

Question 3: (If I would use AI for this) what tools or plugins let me do this?

This is the bridge between reflection and improvement. If something’s worth automating or enhancing, you can look for the tools that handle that capability.

Maybe you’ve realized you’re open to help with vocal harmony generation. Now you're Googling “iZotope Nectar”, “Waves Harmony”, or exploring tools in your DAW that you’ve ignored. You're turning awareness into momentum and installing a better process.

Bringing it all together

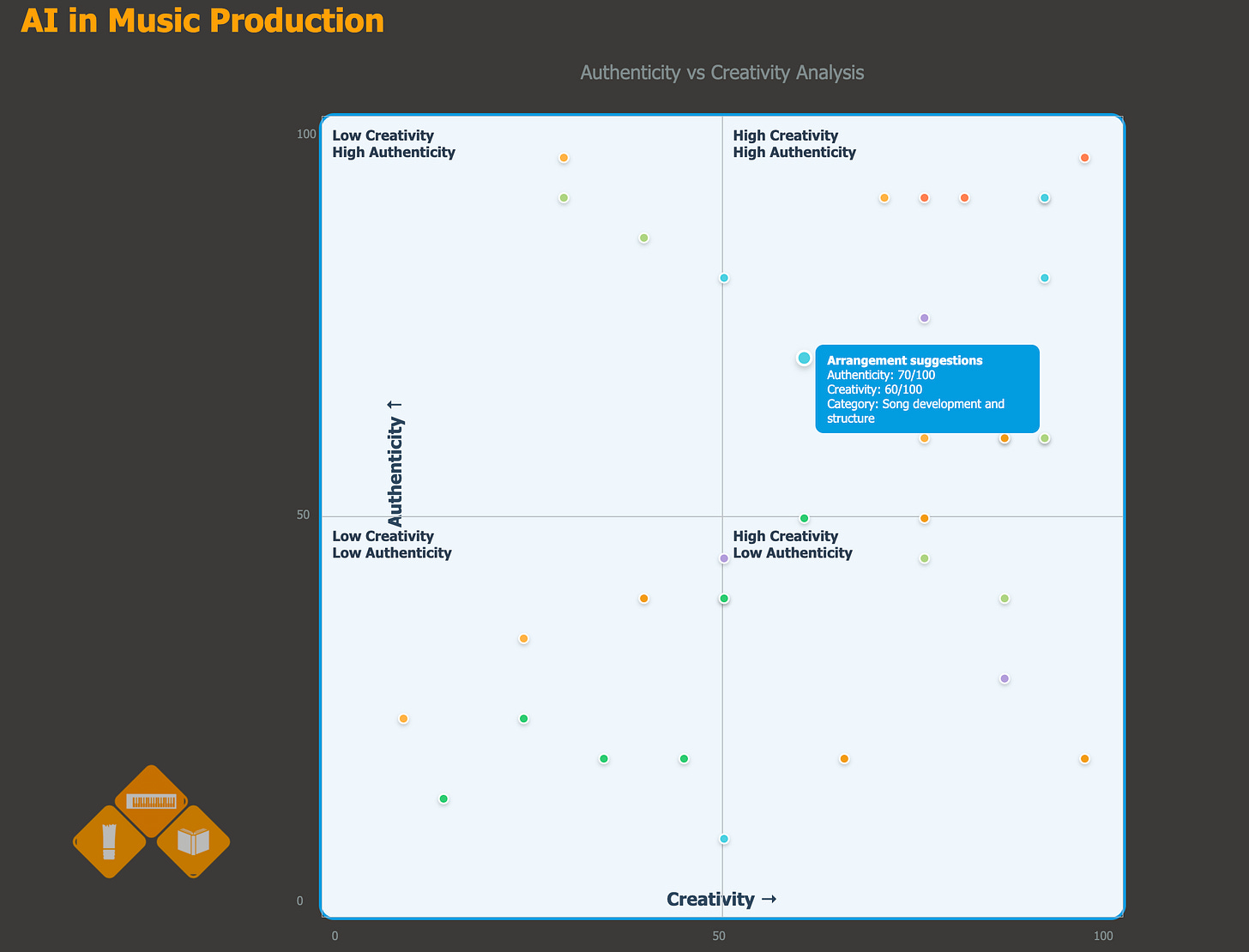

Let’s go back to our list of capabilities, looking at them through the lens of creativity and authenticity.

The best places for AI in music

Even in music, some tasks are just... tasks: repetitive utility work, the grunt work of the process. These rank low in both creativity and authenticity, which is exactly why they’re perfect places to look at using AI. Here are the top five:

Noise reduction or cleanup: Cleaning up hiss, hum, or background chatter isn’t an artistic decision — it’s maintenance. Let the machines handle it.

Tempo mapping for live recordings: Lining up a performance with a grid used to be both tedious and error-prone. Now it’s fast and mostly invisible, just how we like it.

Audio restoration: Fixing crackles, pops, and damaged recordings is no one’s dream gig. But it’s a dream use case for AI.

Mastering assistance: This one’s controversial, but AI can now get your tracks 80% of the way to "release-ready." Perfect for demos or non-perfectionists.

Dynamic range optimization: Balancing the loud and soft parts of a mix used to require finesse. Now it’s a preset. Let it ride.

There’s not much authenticity to compromise when decisions are objective, the variables are measurable, and there’s little room for personal taste.

The worst places for AI in music

The following capabilities score high in both creativity and authenticity, which you might call something of a red zone. They’re where AI scrapes away at the very thing that defines us as artists.

Lyric suggestion and rhyme assistance: It’s tempting to outsource your pen, but if every song leans on the same trained rhyming engine, we lose the voice behind the words.

Melody generation from scratch: Melody is emotion in motion. When the machine does it, you’re often left with something catchy but soulless.

Lyric sentiment alignment: It might tell you your words don’t match the mood — but sometimes dissonance is the whole point. Don’t smooth over the rough edges that make a song real.

Complete song generation: Sure, it’s fascinating. But who are you in that track? If you didn’t write, sing, or even guide the vibe, is it still yours?

Song structure optimization: Formulas can’t feel. Just because a chorus hits at the "optimal" timestamp doesn’t mean it lands in the heart.

Anything that gets in the way of your voice (whether you’ve found it or are still discovering it) belongs here. What are the things we discover over the course of a song? Story. Emotion. Rhythm. Flow. Feel.

These are all areas where we stand to lose the most by bringing in AI.

Want a better understanding of where AI and music intersect?

Join the waitlist to keep tabs on the release of my “AI Map for Music Makers”


Whenever you're ready, here’s how I can help you:

Level up with 1:1 coaching: Get tailored support in prompt design, creative workflows, or AI strategy. Whether you're a writer, leader, or product thinker, I’ll help you use AI to create faster and smarter—without losing your voice.

Grab my Prioritization Power Stack: Not ready for coaching or consulting? Check out the first in my library of plug-and-play prompt packs—a closed-loop productivity system that eliminates busywork.

Book a team training or executive workshop: From half-day bootcamps to role-specific trainings, I help teams unlock practical use cases, establish smart guardrails, and build momentum with clear, no-jargon frameworks.