Stress-testing new AI tools in 30 minutes

How to combine the powers of NotebookLM and ChatGPT to test whether a new AI tool is right for you (with personal and meaningful use cases).

This one is coming to you on a Friday because things have been a little hectic. My wife and I have been on the move, literally: we moved from out west (Denver ⛰️) to the “Gateway to the West” (I’ll let you google that one). Thanks for sticking with me!

This is the second of April’s AI Artistry posts. If you’re not a subscriber yet, here’s what you’ve missed:

In the ever-expanding universe of AI tools, we’re all proverbial kids in a candy store. But as Stinky Pete taught us in Toy Story 2, not every shiny, well-polished toy delivers on its promises. Testing new tools, while a valiant and necessary task, can be a bear.

To that end, I’ve begun using a streamlined, 30-minute framework to stress-test new tools. It ensures that 1) a tool actually meets a need and 2) I don’t go down a rabbit hole and lose a bunch of precious time. Below, I’ll walk you through how I chain together two of my favorite apps (NotebookLM and ChatGPT) to test tools in the most relevant way possible. The key word is relevant.

Key Takeaways

Testing new AI tools is essential but can easily lead to wasted time and misspent subscriptions

By loading product resources (videos, feature pages, etc.) into NotebookLM, we can quickly identify core product features and personal use cases for any tool

Being intentional about our use case and critical of red flags lets us stress-test and make informed decisions about new AI tools in under 30 minutes

The need for a critical eye

It’s not overstating things to say there’s a ‘tool du jour’ in the AI landscape. The landscape is sprawling with apps designed for content generation, workflow automation, image and video creation, and more. With this abundance, it’s all the more important to have a discerning eye. Aimless experimentation leads to decision fatigue, poor tool choices, and even wasted subscription fees.

This framework is tailored for individual users seeking to enhance their personal or professional workflows. It emphasizes curiosity and hands-on experimentation, with a focus on real-world time savings and practical benefits.

And when doing so, it helps to have capabilities (not features) in the back of our mind.

A feature is a specific function or aspect of a product that you can interact with directly. It’s something concrete that the tool does or offers. A capability, on the other hand, is what the tool enables you to accomplish: the broader potential or power it provides.

Capabilities represent the underlying strengths that make the features valuable, and when self-selecting a tool, it’s the capability we’re after.

Here are a few examples of capabilities, framed in a way you’d never see in the marketing materials:

An AI Writing Assistant corrects your grammar

A Data Analysis Tool automates chart generation

A Customer Service AI reads through customer queries and recognizes intent

A Computer Vision AI detects objects in images

An AI Meeting Assistant automates meeting transcription

A Music Composition Tool comes up with chord progressions

A Music Production Tool categorizes and tags samples

Why is this important? Because our workflows are made up of capabilities like these. In other words, this is where we find the overlaps that allow AI to meaningfully augment us and not just be a bloated piece of technology.

And I’ll say it once more: this framework is designed for personal tool selection. Enterprise-level decisions (should) involve a more comprehensive scope with collective input. Here, we prioritize individual exploration and the tangible advantages a tool can offer in day-to-day tasks.

Phase 1 (5 minutes): The preparation phase with NotebookLM

I start by gathering 3-5 resources related to the AI tool I’m evaluating—think product demos, feature pages, and relevant blog posts.

Gather product resources

I use NotebookLM because it’s my favorite tool for working with sources I upload myself. When gathering resources for a tool, I like to look for:

At least one video (preferably a longer demo)

At least one promotional page (like a home page or a features page)

A central documentation page or two (avoid pages that dive too deep into a single feature)

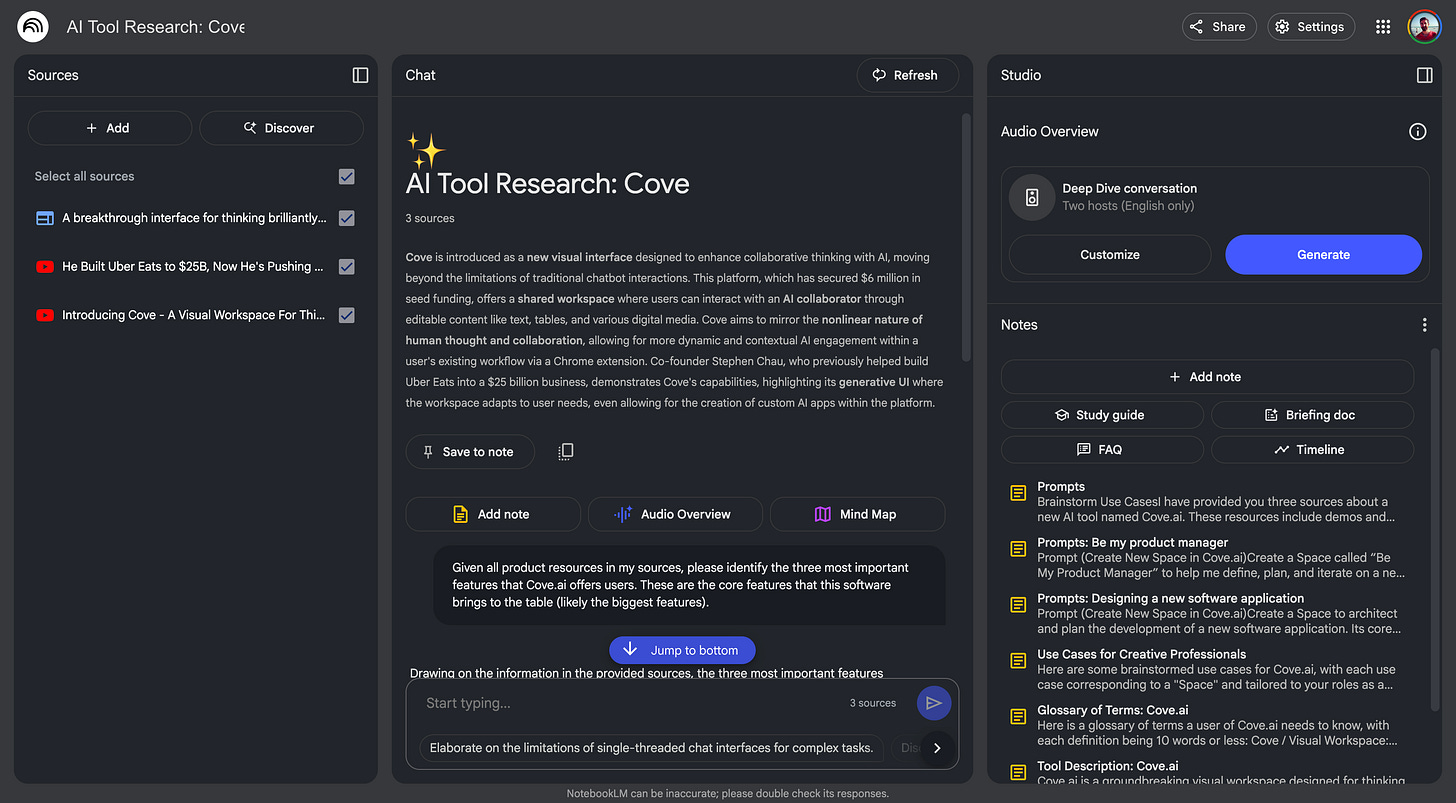

As an example, I most recently used this process to test Cove.ai. Here are the resources I chose to upload to NotebookLM.

Promo Video: Introducing Cove - A Visual Workspace For Thinking With AI

Demo: A Deep Dive where Peter Yang gets a demo from Cove’s CEO

Promotional (Blog): A breakthrough interface for thinking brilliantly together with AI

By importing these into NotebookLM, I have a centralized repository of information about this tool. From there, I prompt NotebookLM directly to pull out all the details I need about the tool’s value proposition and feature set.

Distilling the tool

With these resources at hand, I use NotebookLM to distill the tool’s essence through the following series of prompts:

Tool synopsis and value prop

Key features

Glossary of tool’s terms

Best practices for prompting this tool

And because my knowledge repo is built from the tool’s own documentation, I can simply run these prompts one after another as a series of chats in NotebookLM.

Prompt 1: Tool synopsis and value prop

I reuse this short summary in follow-up prompts, specifically when identifying the personal use case I’ll test the tool with.

Using the product details and resources I've provided, please summarize the value proposition and core functionality of [TOOL_NAME] in three sentences.

Prompt 2: Key features of the tool

We identify the three most important features the tool offers so we don’t get caught up in secondary functionality while looking for our use case. These also help keep the scope of our testing lean.